Description

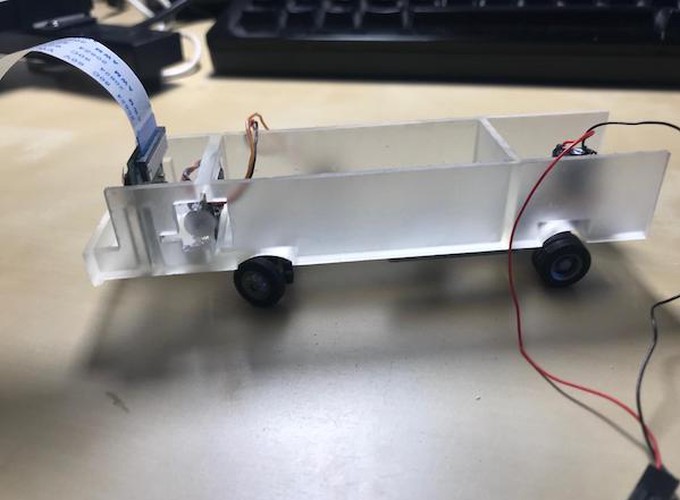

The autonomous truck platform at a scale of 1:87 will be equipped with a custom printed circuit board carrying a Xilinx Zynq 7020 FPGA. This FPGA provides enough computational power to run machine learning (ML) methods, at least to a limited extent. The planned sensors on the truck platform are a camera, several Sharp GP2Y03E distance sensors, and an MPU-9255 inertial measurement unit (IMU). Running ML-based algorithms on mobile, compact, and power-constrained hardware requires an optimized hardware design. Standard microcontrollers offer very low power consumption and high single-thread performance, but they perform poorly on the highly parallel (SIMD-style) workloads typical of machine learning.
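As a rough illustration of why ML workloads count as parallel, the following minimal sketch evaluates one fully connected layer. The helper names `dense_layer` and `mac_count` and the layer sizes are hypothetical and not taken from the project. Every output is an independent dot product, so the multiply-accumulate (MAC) operations for different outputs could run at the same time on hardware with many MAC units, whereas a microcontroller executes them essentially one (or a few) at a time.

```python
# Minimal sketch (hypothetical helpers, not project code): a fully connected
# layer y = W·x + b, where each output neuron is an independent chain of
# multiply-accumulate (MAC) operations.

from typing import List


def dense_layer(weights: List[List[int]], x: List[int], bias: List[int]) -> List[int]:
    """Compute y[i] = sum_j weights[i][j] * x[j] + bias[i] for every output i."""
    outputs = []
    for row, b in zip(weights, bias):
        # One output neuron: this dot product does not depend on any other
        # output, so all rows could be computed in parallel on suitable hardware.
        acc = b
        for w, xj in zip(row, x):
            acc += w * xj  # one multiply-accumulate
        outputs.append(acc)
    return outputs


def mac_count(n_inputs: int, n_outputs: int) -> int:
    """Number of MAC operations for one evaluation of a dense layer."""
    return n_inputs * n_outputs


if __name__ == "__main__":
    W = [[1, 2, 3],
         [4, 5, 6]]
    x = [1, 0, -1]
    b = [10, 20]
    print(dense_layer(W, x, b))  # [8, 18]
    # A hypothetical layer with 1024 inputs and 256 outputs:
    print(mac_count(1024, 256))  # 262144
```

Even this single hypothetical 1024×256 layer needs 262,144 MACs per evaluation, which is where many parallel MAC units pay off.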

A low-power FPGA with an optimized design can deliver much higher computational performance at acceptable power consumption. Because different types of ML methods should be supported, a general-purpose design is advantageous. One such design is Google's Tensor Processing Unit (TPU) (Jouppi et al., 2017). Fuhrmann (2018) proposed a comparable design for a Zynq FPGA and tested it on a commercial off-the-shelf board.
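The core of the TPU described by Jouppi et al. (2017) is a matrix unit built as a systolic array of MAC cells (256×256 cells operating on 8-bit integers in the first-generation TPU). The following is a simplified software model of that principle, assuming a weight-stationary dataflow in which each cell keeps one weight while activations move right and partial sums move down. It is an illustration only, not the TPU's actual microarchitecture or Fuhrmann's Zynq design. The function name `systolic_matmul` is hypothetical, and plain Python integers stand in for the 8-bit inputs and wider accumulators of real hardware.

```python
# Simplified cycle-level model of a weight-stationary systolic array
# (illustrative assumption, not the TPU or the Zynq design itself).

from typing import List

Matrix = List[List[int]]


def systolic_matmul(A: Matrix, W: Matrix) -> Matrix:
    """Compute C = A x W on a K x N grid of processing elements (PEs).

    PE(k, n) permanently holds weight W[k][n]. Activation A[m][k] enters row k
    from the left in cycle m + k and moves one PE to the right per cycle.
    Partial sums move one PE down per cycle and leave the bottom row as C[m][n].
    """
    M, K, N = len(A), len(W), len(W[0])
    assert all(len(row) == K for row in A), "inner dimensions must match"

    a_reg = [[0] * N for _ in range(K)]  # activation register of each PE (passed right)
    p_reg = [[0] * N for _ in range(K)]  # partial-sum register of each PE (passed down)
    C = [[0] * N for _ in range(M)]

    # The last result C[M-1][N-1] is complete in cycle (M-1) + (N-1) + (K-1).
    for t in range(M + N + K - 2):
        new_a = [[0] * N for _ in range(K)]
        new_p = [[0] * N for _ in range(K)]
        for k in range(K):
            for n in range(N):
                if n == 0:
                    # Skewed injection: row k receives A[m][k] in cycle m + k.
                    m = t - k
                    a_in = A[m][k] if 0 <= m < M else 0
                else:
                    a_in = a_reg[k][n - 1]  # what the left neighbour held last cycle
                p_in = p_reg[k - 1][n] if k > 0 else 0  # partial sum from the PE above
                new_a[k][n] = a_in
                new_p[k][n] = p_in + W[k][n] * a_in  # one multiply-accumulate
        a_reg, p_reg = new_a, new_p  # all PEs update together, like a clock edge

        # C[m][n] leaves the bottom row at the end of cycle m + n + (K - 1).
        for n in range(N):
            m = t - n - (K - 1)
            if 0 <= m < M:
                C[m][n] = p_reg[K - 1][n]
    return C


if __name__ == "__main__":
    A = [[1, 2],
         [3, 4],
         [5, 6]]        # 3 input vectors with K = 2 features each
    W = [[7, 8, 9],
         [10, 11, 12]]  # K x N = 2 x 3 weights, held stationary in the PEs
    print(systolic_matmul(A, W))  # [[27, 30, 33], [61, 68, 75], [95, 106, 117]]
```

Each cell only exchanges data with its direct neighbours, which keeps the wiring mostly local, a property that suits FPGA fabric. In this model an M×K by K×N product finishes after M + N + K − 2 cycles, compared with the M·K·N steps a single sequential MAC unit would need.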

References

Tiedemann, T., Fuhrmann, J., Paulsen, S., Schnirpel, T., Schönherr, N., Buth, B., and Pareigis, S. (2019). Miniature Autonomy as One Important Testing Means in the Development of Machine Learning Methods for Autonomous Driving: How ML-Based Autonomous Driving Could Be Realized on a 1:87 Scale. In Proceedings of ICINCO 2019. SCITEPRESS. (accepted)

Pareigis, S., Tiedemann, T., Fuhrmann, J., Paulsen, S., Schnirpel, T., and Schönherr, N. (2019). Miniaturautonomie und Echtzeitsysteme [Miniature autonomy and real-time systems]. In Tagungsband Echtzeit 2019, Springer Lecture Notes. Springer. (accepted)

Fuhrmann, J. (2018). Implementierung einer Tensor Processing Unit mit dem Fokus auf Embedded Systems und das Internet of Things [Implementation of a tensor processing unit with a focus on embedded systems and the Internet of Things]. Bachelor thesis.

Jouppi, N. P., Young, C., Patil, N., Patterson, D., Agrawal, G., Bajwa, R., Bates, S., Bhatia, S., Boden, N., Borchers, A., Boyle, R., Cantin, P., Chao, C., Clark, C., Coriell, J., Daley, M., Dau, M., Dean, J., Gelb, B., Ghaemmaghami, T. V., Gottipati, R., Gulland, W., Hagmann, R., Ho, C. R., Hogberg, D., Hu, J., Hundt, R., Hurt, D., Ibarz, J., Jaffey, A., Jaworski, A., Kaplan, A., Khaitan, H., Killebrew, D., Koch, A., Kumar, N., Lacy, S., Laudon, J., Law, J., Le, D., Leary, C., Liu, Z., Lucke, K., Lundin, A., MacKean, G., Maggiore, A., Mahony, M., Miller, K., Nagarajan, R., Narayanaswami, R., Ni, R., Nix, K., Norrie, T., Omernick, M., Penukonda, N., Phelps, A., Ross, J., Ross, M., Salek, A., Samadiani, E., Severn, C., Sizikov, G., Snelham, M., Souter, J., Steinberg, D., Swing, A., Tan, M., Thorson, G., Tian, B., Toma, H., Tuttle, E., Vasudevan, V., Walter, R., Wang, W., Wilcox, E., and Yoon, D. H. (2017). In-datacenter performance analysis of a tensor processing unit. In 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), pages 1–12.